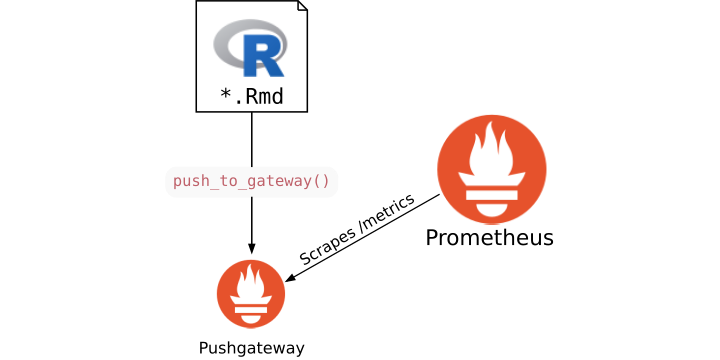

The openmetrics R package now supports pushing metrics to a Prometheus Pushgateway instance, which is useful for short-lived batch scripts or RMarkdown reports.

You might want to expose metrics from these scripts or reports to Prometheus to improve monitoring and alerting on failures, but many of these processes do not run long enough to host a web server that Prometheus can scrape.

This is where the Pushgateway comes in. It lets you push metrics to a central location where they are held until Prometheus scrapes them. But beware: there are only a limited number of use cases for pushing metrics, and you should always prefer pull-based methods when possible.

Instrumenting an R ETL Job

Often R users find themselves writing an R script (or RMarkdown report) that runs regularly, pulling data from a database and creating a new dataset for further reporting, visualisation or modelling. This is the “extract, transform, load” (ETL) pattern.

There are two natural metrics that we might want to expose from such a script to Prometheus:

- Whether or not the ETL succeeded; and

- How long it took.

For example:

```r
library(openmetrics)

success <- gauge_metric(
  "etl_outcome", "Whether or not the ETL process succeeded."
)
duration <- gauge_metric(
  "etl_duration_seconds", "Total running time of the ETL process."
)
```

We might also add more granular timing metrics, such as breaking out the database pull so that its duration can be tracked over time, and more domain-specific metrics, such as the number of users in the resulting dataset:

```r
db_pull_duration <- gauge_metric(
  "etl_db_pull_duration_seconds",
  "Total time spent pulling data into the ETL process."
)
users <- gauge_metric(
  "etl_users_processed", "Number of unique users processed by the ETL."
)
```

These are all gauges, because we are not taking repeated measures and none of them are expected to increase monotonically.

Actually using these metrics in an ETL script might look as follows:

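```r
start <- Sys.time()

# Seconds elapsed since `start`, as a plain number.
elapsed <- function() {
  as.numeric(difftime(Sys.time(), start, units = "secs"))
}

# pull_from_db(), process_raw_data() and write_cleaned_data() stand in for
# your own ETL steps.
df <- pull_from_db()
db_pull_duration$set(elapsed())

clean <- process_raw_data(df)
write_cleaned_data(clean)
users$set(length(unique(clean$user_id)))

success$set(1)
duration$set(elapsed())
```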
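Because elapsed() counts from start, it yields a per-step duration only for the first step; timing a later step the same way would include everything that ran before it. One way around this is to time each step on its own and hand the result to a callback. The time_step() helper below is hypothetical (it is not part of openmetrics), and the example uses a plain callback in place of a real gauge so that it runs on its own:

```r
# Hypothetical helper (not part of openmetrics): evaluates `expr`, passes the
# time it took in seconds to `record`, and returns the value of `expr`.
time_step <- function(expr, record) {
  t0 <- Sys.time()
  value <- expr  # R evaluates the lazy argument `expr` here
  record(as.numeric(difftime(Sys.time(), t0, units = "secs")))
  value
}

# Example with a plain callback standing in for a gauge's $set method.
recorded <- NULL
result <- time_step({
  Sys.sleep(0.1)
  "done"
}, function(secs) recorded <<- secs)
```

In the ETL script this might read `df <- time_step(pull_from_db(), function(s) db_pull_duration$set(s))`.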

These metrics can then be pushed to a Pushgateway instance at the end of the script:

```r
push_to_gateway("http://localhost:9091", job = "etl-job-1")
```

You can also use on.exit() to ensure that metrics are pushed even if the script fails.
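on.exit() registers code to run when the enclosing function exits, whether it returns normally or through an error, so in practice this means wrapping the ETL steps in a function. The sketch below uses a hypothetical push_metrics() stand-in for push_to_gateway() that only records what it receives, so it runs without a Pushgateway. It starts the outcome at 0 and sets it to 1 only once every step has finished:

```r
# Hypothetical stand-in for push_to_gateway(): records each pushed outcome
# in `pushed` instead of sending anything over the network.
pushed <- list()
push_metrics <- function(outcome) {
  pushed[[length(pushed) + 1]] <<- outcome
}

run_etl <- function(fail = FALSE) {
  outcome <- 0
  # Runs when run_etl() exits, including via stop() below, and sees
  # whatever value `outcome` has at that moment.
  on.exit(push_metrics(outcome), add = TRUE)
  if (fail) stop("simulated ETL failure")
  outcome <- 1
  invisible(outcome)
}

run_etl()                                 # pushes 1
try(run_etl(fail = TRUE), silent = TRUE)  # still pushes, with 0
```

In a real script, the deferred expression would instead call success$set(outcome) followed by push_to_gateway().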

If you have parameterised scripts or reports (say, running one job for each language group of your users), you can pass these parameters through as labels on the job in Pushgateway:

```r
push_to_gateway("http://localhost:9091", job = "etl-job-1", lang = "en")
```
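The Pushgateway identifies each group of pushed metrics by a grouping key encoded in the URL path, of the form /metrics/job/<job>{/<label>/<value>}. The hypothetical grouping_url() below only illustrates that layout for the call above. It is not necessarily how openmetrics builds its requests, and it does not handle label values containing a slash:

```r
# Hypothetical helper: builds a Pushgateway grouping-key URL of the form
#   <gateway>/metrics/job/<job>/<label>/<value>/...
grouping_url <- function(gateway, job, ...) {
  labels <- c(...)
  enc <- function(x) utils::URLencode(as.character(x), reserved = TRUE)
  path <- c("metrics", "job", enc(job))
  for (name in names(labels)) {
    path <- c(path, name, enc(labels[[name]]))
  }
  # Drop any trailing slash on the gateway address before joining.
  paste(c(sub("/+$", "", gateway), path), collapse = "/")
}

url <- grouping_url("http://localhost:9091", job = "etl-job-1", lang = "en")
```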

These metrics would look like the following when rendered:

```
# HELP etl_outcome Whether or not the ETL process succeeded.
# TYPE etl_outcome gauge
etl_outcome 1
# HELP etl_duration_seconds Total running time of the ETL process.
# TYPE etl_duration_seconds gauge
etl_duration_seconds 1.21224451065063
# HELP etl_db_pull_duration_seconds Total time spent pulling data into the ETL process.
# TYPE etl_db_pull_duration_seconds gauge
etl_db_pull_duration_seconds 1.18743181228638
# HELP etl_users_processed Number of unique users processed by the ETL.
# TYPE etl_users_processed gauge
etl_users_processed 100
# EOF
```
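Each metric family in this text format is a # HELP line, a # TYPE line and then its samples, with # EOF closing the whole exposition. A minimal base-R sketch of that layout for an unlabelled gauge follows; render_gauge() is hypothetical and only illustrates the format, it is not the openmetrics renderer:

```r
# Hypothetical helper: formats one unlabelled gauge in the text layout shown
# above (HELP line, TYPE line, then a single sample line).
render_gauge <- function(name, help, value) {
  c(
    paste("# HELP", name, help),
    paste("# TYPE", name, "gauge"),
    paste(name, format(value, digits = 15))
  )
}

out <- c(
  render_gauge("etl_outcome", "Whether or not the ETL process succeeded.", 1),
  render_gauge("etl_users_processed",
               "Number of unique users processed by the ETL.", 100),
  "# EOF"
)
cat(out, sep = "\n")
```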

Bonus: Timing Chunks with knitr Hooks

The knitr package, which is used to render RMarkdown reports, has a feature called hooks that can run code before and after a chunk is executed. We can use this to time a chunk without cluttering its code with metric-related boilerplate.

To do this, we need to define a hook in the RMarkdown document:

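```r
knitr::knit_hooks$set(duration_metric = function(before, options, envir) {
  if (before) {
    # Record the start time just before the chunk runs.
    envir$.start <- Sys.time()
  } else {
    # options$duration_metric holds the *name* of the gauge to set.
    if (!exists(options$duration_metric, envir = envir)) {
      stop("No object named '", options$duration_metric,
           "' found for the duration_metric chunk option.")
    }
    metric <- get(options$duration_metric, envir = envir)
    metric$set(as.numeric(difftime(Sys.time(), envir$.start, units = "secs")))
  }
  # Return NULL so that nothing is written into the rendered output.
  invisible(NULL)
})
```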

And then use it as follows:

````
```{r chunk-1, duration_metric = "db_pull_duration"}
df <- pull_from_db()
```
````